Gradients for Time Scheduled Conditional Variables in Neural Differential Equations

A short derivation of the continuous adjoint equation for time scheduled conditional variables.

Introduction

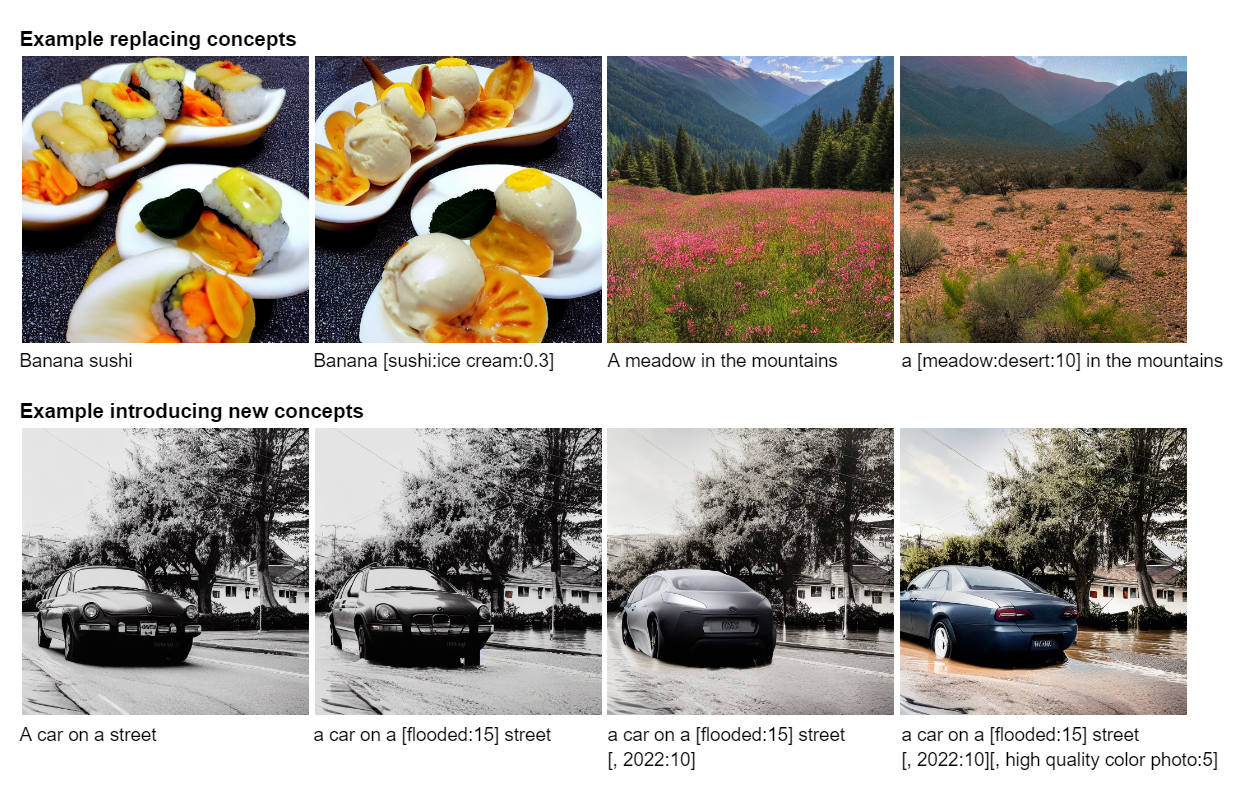

The advent of large-scale diffusion models conditioned on text embeddings

More generally, we can view this as having the conditional information (in this case text embeddings) scheduled w.r.t. time. Formally, assume we have a U-Net trained on the noise-prediction task \(\bseps_\theta(\bfx_t, \bfz, t)\) conditioned on a time scheduled text embedding \(\bfz(t)\). The sampling procedure amounts to solving the probability flow ODE from time \(T\) to time \(0\): \(\begin{equation} \frac{\rmd \bfx_t}{\rmd t} = f(t)\bfx_t + \frac{g^2(t)}{2\sigma_t}\bseps_\theta(\bfx_t, \bfz(t), t), \end{equation}\) where \(f, g\) define the drift and diffusion coefficients of a Variance Preserving (VP) type SDE.
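To make the sampling equation concrete, here is a minimal sketch of an Euler solve of the probability flow ODE with one conditioning value per solver step. Everything specific in it is an assumption made for illustration: the linear schedule `beta`, the constants `B_MIN` and `B_MAX`, the scalar state, and above all `eps_model`, a toy stand-in for the U-Net that returns the exact noise prediction when the data distribution is a point mass at \(\bfz\). The solve stops at a small `t_end` rather than \(0\) because \(\sigma_t \to 0\) there.

```python
import math

# Hypothetical linear VP noise schedule beta(t) = B_MIN + t * (B_MAX - B_MIN).
B_MIN, B_MAX = 0.1, 20.0


def beta(t):
    return B_MIN + t * (B_MAX - B_MIN)


def alpha_sigma(t):
    """Signal and noise scales (alpha_t, sigma_t) of the VP SDE at time t."""
    integral = B_MIN * t + 0.5 * t * t * (B_MAX - B_MIN)  # int_0^t beta(s) ds
    alpha = math.exp(-0.5 * integral)
    sigma = math.sqrt(1.0 - alpha * alpha)
    return alpha, sigma


def eps_model(x, z, t):
    """Toy stand-in for the U-Net: exact noise prediction for data concentrated at z."""
    alpha, sigma = alpha_sigma(t)
    return (x - alpha * z) / sigma


def sample(x_T, z_schedule, T=1.0, t_end=1e-3):
    """Euler solve of the probability flow ODE from T down to t_end.

    z_schedule[k] is the conditioning value used on the k-th solver step.
    """
    h = (T - t_end) / len(z_schedule)
    x, t = x_T, T
    for z_k in z_schedule:
        _, sigma = alpha_sigma(t)
        f_t = -0.5 * beta(t)  # drift coefficient f(t)
        g2_t = beta(t)        # squared diffusion coefficient g^2(t)
        dx_dt = f_t * x + g2_t / (2.0 * sigma) * eps_model(x, z_k, t)
        x = x - h * dx_dt     # step backward in time
        t = t - h
    return x


# Constant conditioning versus a schedule that switches halfway through sampling.
x_const = sample(0.5, [2.0] * 1000)
x_sched = sample(0.5, [-2.0] * 500 + [2.0] * 500)
```

In this toy setting both runs end close to the final conditioning value, although the scheduled run follows a different trajectory on the way there.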

Training-free guidance

A closely related area of active research has been the development of techniques which search for the optimal generation parameters.

More specifically, they attempt to solve the following optimization problem: \(\begin{equation} \label{eq:problem_stmt_ode} \argmin_{\bfx_T, \bfz, \theta}\quad \mathcal{L}\bigg(\bfx_T + \int_T^0 f(t)\bfx_t + \frac{g^2(t)}{2\sigma_t}\bseps_\theta(\bfx_t, \bfz, t)\;\rmd t\bigg), \end{equation}\) where \(\mathcal L\) is a real-valued loss function on the output \(\bfx_0\).

Several recent works this year

Problem statement. Given \(\begin{equation} \bfx_0 = \bfx_T + \int_T^0 f(t)\bfx_t + \frac{g^2(t)}{2\sigma_t}\bseps_\theta(\bfx_t, \bfz(t), t)\;\rmd t, \end{equation}\) and \(\mathcal L (\bfx_0)\), find: \(\begin{equation} \frac {\partial \mathcal L}{\partial \bfz(t)}, \qquad t \in [0,T]. \end{equation}\)

In an earlier blog post we showed how to find \(\partial \mathcal L / \partial \bfz\) by solving the continuous adjoint equations. How do the continuous adjoint equations change when we replace \(\bfz\) with the time scheduled \(\bfz(t)\) in the sampling equation? What we will now show is that

We can simply replace \(\bfz\) with \(\bfz(t)\) in the continuous adjoint equations.

This result, while intuitive, does require some technical details to show.

Gradients of time-scheduled conditional variables

It is well known that diffusion models are just a special type of neural differential equation, either a neural ODE or SDE. As such we will show this result holds more generally for neural ODEs.

Theorem (Continuous adjoint equations for time scheduled conditional variables). Suppose there exists a function \(\bfz: [0,T] \to \R^z\) which can be defined as a càdlàg

Why càdlàg?

In practice \(\bfz(t)\) is often a discrete set \(\{\bfz_k\}_{k=1}^n\) where \(n\) corresponds to the number of discretization steps the numerical ODE solver takes. While the proof is easier for a continuously differentiable function \(\bfz(t)\), we opt for this construction for the sake of generality. We choose a càdlàg piecewise function, a relatively mild assumption, to ensure that we can define the augmented state on each continuous interval of the piecewise function in terms of the right derivative.
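As a small illustration of what right-continuity means for a discrete schedule, the sketch below builds a piecewise-constant \(\bfz(t)\) from a list of values. The helper name `make_cadlag_schedule` and the example breakpoints are hypothetical; the only point is that at a breakpoint the function already takes the value of the interval that starts there.

```python
from bisect import bisect_right


def make_cadlag_schedule(starts, values):
    """Return z(t) with z(t) = values[k] for starts[k] <= t < starts[k + 1].

    `starts` must be sorted in increasing order; the last value is held for
    all t >= starts[-1]. The result is right-continuous with left limits.
    """
    if len(starts) != len(values):
        raise ValueError("starts and values must have the same length")

    def z(t):
        if t < starts[0]:
            raise ValueError("t lies before the first interval")
        # Index of the last breakpoint that is <= t.
        k = bisect_right(starts, t) - 1
        return values[k]

    return z


# Four intervals on [0, 1): the value jumps at 0.25, 0.5 and 0.75.
z = make_cadlag_schedule([0.0, 0.25, 0.5, 0.75], [10.0, 20.0, 30.0, 40.0])
```

Evaluating `z(0.25)` gives the value of the interval beginning at `0.25`, while any `t` slightly below `0.25` still returns the previous value, so the jump is attached to the right-hand interval.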

In the remainder of this blog post we provide the proof of this result. Our proof technique is an extension of the one used by Patrick Kidger (Appendix C.3.1).

Proof. Recall that \(\bfz(t)\) is a piecewise function of time with partition of the time domain \(\Pi\). Without loss of generality we consider some time interval \(\pi = [t_{m-1}, t_m]\) for some \(1 \leq m \leq n\). Consider the augmented state defined on the interval \(\pi\): \(\begin{equation} \frac{\rmd}{\rmd t} \begin{bmatrix} \bfy\\ \bfz \end{bmatrix}(t) = \bsf_{\text{aug}} = \begin{bmatrix} \bsf_\theta(\bfy(t), \bfz(t), t)\\ \overrightarrow\partial\bfz(t) \end{bmatrix}, \end{equation}\) where \(\overrightarrow\partial\bfz: [0,T] \to \R^z\) denotes the right derivative of \(\bfz\), evaluated at time \(t\). Let \(\bfa_\text{aug}\) denote the augmented adjoint state \(\begin{equation} \label{eq:app:adjoint_aug} \bfa_\text{aug}(t) := \begin{bmatrix} \bfa_\bfy\\\bfa_\bfz \end{bmatrix}(t). \end{equation}\) Then the Jacobian of \(\bsf_\text{aug}\) is given by \(\begin{equation} \label{eq:app:jacobian_aug} \frac{\partial \bsf_\text{aug}}{\partial [\bfy, \bfz]} = \begin{bmatrix} \frac{\partial \bsf_\theta(\bfy, \bfz, t)}{\partial \bfy} & \frac{\partial \bsf_\theta(\bfy, \bfz, t)}{\partial \bfz}\\ \mathbf 0 & \mathbf 0\\ \end{bmatrix}. \end{equation}\) As the state \(\bfz(t)\) evolves with \(\overrightarrow\partial\bfz(t)\) on the interval \([t_{m-1}, t_m]\) in the forward direction, the derivative of this component of the augmented vector field w.r.t. \(\bfz\) is clearly \(\mathbf 0\), as it only depends on time. Note that, as the bottom row of the Jacobian of \(\bsf_\text{aug}\) is all \(\mathbf 0\) and \(\bsf_\theta\) is continuous in \(t\), we can consider the evolution of \(\bfa_\text{aug}\) over the whole interval \([0,T]\) rather than just a partition of it. The evolution of the augmented adjoint state on \([0,T]\) is then given as \(\begin{equation} \frac{\rmd \bfa_\text{aug}}{\rmd t}(t) = -\begin{bmatrix} \bfa_\bfy & \bfa_\bfz \end{bmatrix}(t) \frac{\partial \bsf_\text{aug}}{\partial [\bfy, \bfz]}(t). \end{equation}\) Therefore, \(\bfa_\bfz(t)\) is a solution to the initial value problem: \(\begin{equation} \bfa_\bfz(T) = 0, \qquad \frac{\rmd \bfa_\bfz}{\rmd t}(t) = -\bfa_\bfy(t)^\top \frac{\partial \bsf_\theta(\bfy(t), \bfz(t), t)}{\partial \bfz(t)}. \end{equation}\)
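The initial value problem for \(\bfa_\bfz\) can be checked numerically on a toy problem. The sketch below is an illustration under stated assumptions, not the construction used in the proof: it takes the hypothetical scalar ODE \(\rmd y / \rmd t = -y + z(t)\) with a piecewise-constant schedule, the loss \(\mathcal L = \tfrac12 y(T)^2\), and explicit Euler steps. For a piecewise-constant schedule, the gradient w.r.t. the value \(z_k\) held on \([t_{k-1}, t_k)\) is read off as the change of \(\bfa_\bfz\) across that interval, \(\bfa_\bfz(t_{k-1}) - \bfa_\bfz(t_k)\). All function names are made up for this example.

```python
def solve_forward(y0, z_values, T, substeps):
    """Explicit Euler solve of dy/dt = -y + z(t), with z(t) = z_values[k] on interval k."""
    h = T / (len(z_values) * substeps)
    y = y0
    for z_k in z_values:
        for _ in range(substeps):
            y = y + h * (-y + z_k)
    return y


def loss(y_T):
    return 0.5 * y_T * y_T


def adjoint_gradients(y0, z_values, T, substeps):
    """Integrate a_y and a_z backward from T to 0 and read off dL/dz_k per interval."""
    n = len(z_values)
    h = T / (n * substeps)
    a_y = solve_forward(y0, z_values, T, substeps)  # a_y(T) = dL/dy(T) = y(T)
    a_z = 0.0                                       # a_z(T) = 0
    grads = [0.0] * n
    for k in reversed(range(n)):
        a_z_right = a_z  # value of a_z at the right end of interval k
        for _ in range(substeps):
            # da_z/dt = -a_y * df/dz with df/dz = 1, stepped from t to t - h.
            a_z = a_z + h * a_y * 1.0
            # da_y/dt = -a_y * df/dy with df/dy = -1, stepped from t to t - h.
            a_y = a_y + h * a_y * (-1.0)
        grads[k] = a_z - a_z_right
    return grads


def finite_difference_gradients(y0, z_values, T, substeps, eps=1e-5):
    """Central finite differences of the loss w.r.t. each z_k, used as a reference."""
    grads = []
    for k in range(len(z_values)):
        up = list(z_values)
        down = list(z_values)
        up[k] += eps
        down[k] -= eps
        l_up = loss(solve_forward(y0, up, T, substeps))
        l_down = loss(solve_forward(y0, down, T, substeps))
        grads.append((l_up - l_down) / (2.0 * eps))
    return grads


z_values = [1.0, -0.5, 2.0, 0.3]
g_adjoint = adjoint_gradients(0.7, z_values, T=1.0, substeps=250)
g_reference = finite_difference_gradients(0.7, z_values, T=1.0, substeps=250)
```

Because this vector field is linear, its Jacobians do not depend on \(y\), so the backward pass does not need to store or re-solve the forward trajectory. A nonlinear \(\bsf_\theta\) would require \(\bfy(t)\) along the backward solve.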

Next we show that there exists a unique solution to the initial value problem. As \(\bfy\) is continuous and \(\bsf_\theta\) is continuously differentiable in \(\bfy\), it follows that \(t \mapsto \frac{\partial \bsf_\theta}{\partial \bfy}(\bfy(t), \bfz(t), t)\) is a continuous function on the compact set \([t_{m-1}, t_m]\). As such it is bounded by some \(L > 0\). Likewise, for \(\bfa_\bfy \in \R^d\) the map \((t, \bfa_\bfy) \mapsto -\bfa_\bfy \frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]}(\bfy(t), \bfz(t), t)\) is Lipschitz in \(\bfa_\bfy\) with Lipschitz constant \(L\), and this constant is independent of \(t\). Therefore, by the Picard-Lindelöf theorem the solution \(\bfa_\text{aug}(t)\) exists and is unique.
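For readers who want the Lipschitz step spelled out, here is a short worked version. It is a sketch under the assumption that the operator norm of the Jacobian \(\frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]}\) along the trajectory is bounded by \(L\) on the interval; the symbols \(\bfa\) and \(\bfa'\), two arbitrary values of the adjoint, are introduced here only for illustration. Since the map is linear in the adjoint, \(\begin{equation} \bigg\| {-\bfa} \frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]} + \bfa' \frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]} \bigg\| = \bigg\| (\bfa - \bfa') \frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]} \bigg\| \leq \bigg\| \frac{\partial \bsf_\theta}{\partial [\bfy, \bfz]} \bigg\| \, \| \bfa - \bfa' \| \leq L \, \| \bfa - \bfa' \|, \end{equation}\) where the Jacobian is evaluated at \((\bfy(t), \bfz(t), t)\) and the bound holds uniformly in \(t\).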

If you found this useful and would like to cite this post in an academic context, please cite it as:

Blasingame, Zander W. (Dec 2024). Gradients for Time Scheduled Conditional Variables in Neural Differential Equations. https://zblasingame.github.io.

or as a BibTeX entry:

@article{blasingame2024gradients-for-time-scheduled-conditional-variables-in-neural-differential-equations,
  title = {Gradients for Time Scheduled Conditional Variables in Neural Differential Equations},
  author = {Blasingame, Zander W.},
  year = {2024},
  month = {Dec},
  url = {https://zblasingame.github.io/blog/2024/cadlag-conditional/}
}