Face Morphing with Diffusion Models

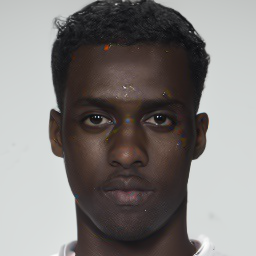

This blog introduces a new family of face morphing attacks known as Difusion Morphs (DiM). DiMs are a novel method for constructing morphed faces which exploit the iterative nature of diffusion models to construct face morphs which are more effective and more realistic in appearance. DiMs achieve state-of-the-art morphing performance and visual fidelity, far surpassing previous methods. In this blog post I will detail the intution, basic concepts, and applications of DiMs.

Introduction

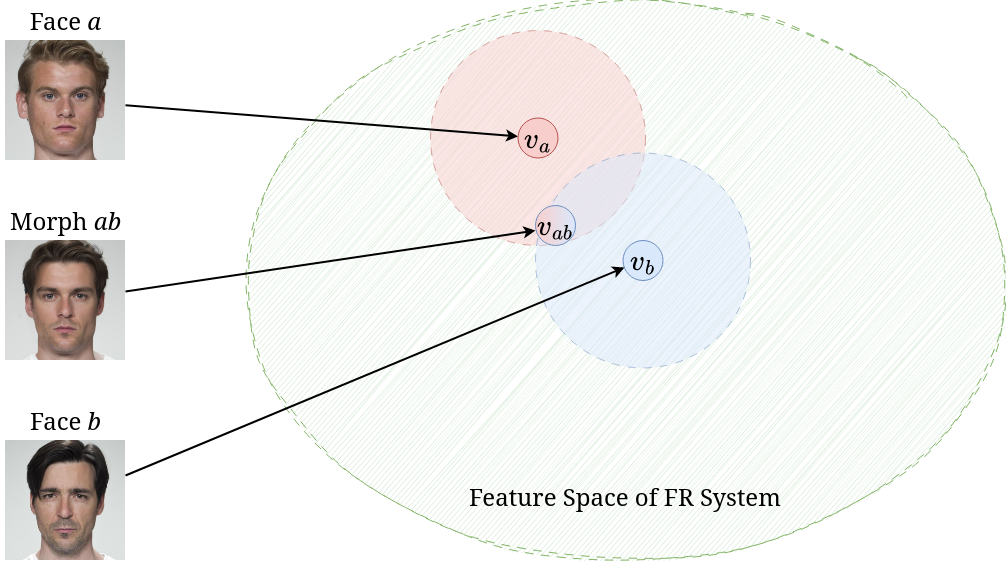

Face morphing is a kind of attack wherein the attacker attempts to combine the faces of two real identities into one face. This attack exploits the structure of the embedding space of the Face Recognition (FR) system

Face morphing attacks fall broadly into two categories.

-

Landmark-based attacks create morphed images by warping and aligning facial landmarks of the two face images before performing a pixel-wise average to obtain the morph

. -

Representation-based attacks create morphs by first embedding the original images into a representational space, once embedded into this space an interpolation between the two embeddings is constructed to a morphed representation. This morph representation is then projected back into the image space to created the morphed image

.

Historically, representation-based morphs have been created using Generative Adversarial Networks (GANs)

Landmark-based attacks are simple and surprisingly effective

In this blog post, I introduce a novel family of representation-based attacks collectively known as Difusion Morphs (DiM) which addresses both the issues of prominent visual artefacts and inability to adequately fool an FR system. The key idea is to use a type of iterative generative model known as diffusion models or score-based models

Diffusion

Diffusion models start with a diffusion process which perturbs the original data distribution \(p(\bfx)\) on same subset of Euclidean space \(\X \subseteq \R^n\) into isotropic Gaussian noise \(\mathcal{N}(\mathbf 0, \mathbf I)\). This process can be modeled with an Itô Stochastic Differential Equation (SDE) of the form \begin{equation} \mathrm{d}\bfx_t = f(t)\bfx_t\; \mathrm dt + g(t)\; \mathrm d\mathbf{w}_t \end{equation} where \(f, g\) are real-valued functions, \(\{\bfw_t\}_{t \in [0, T]}\) is the standard Wiener process on time \([0, T]\), and \(\mathrm d\bfw_t\) can be thought of as infinitesimal white noise. The drift coefficient \(f(t)\bfx_t\) is the deterministic part of the SDE and \(f(t)\bfx_t\;\mathrm dt\) can be thought of as the ODE term of the SDE. Conversely, the diffusion coefficient \(g(t)\) is the stochastic part of the SDE which controls how much noise is injected into the system. We can think of \(g(t)\;\mathrm d\bfw_t\) as the control term of the SDE.

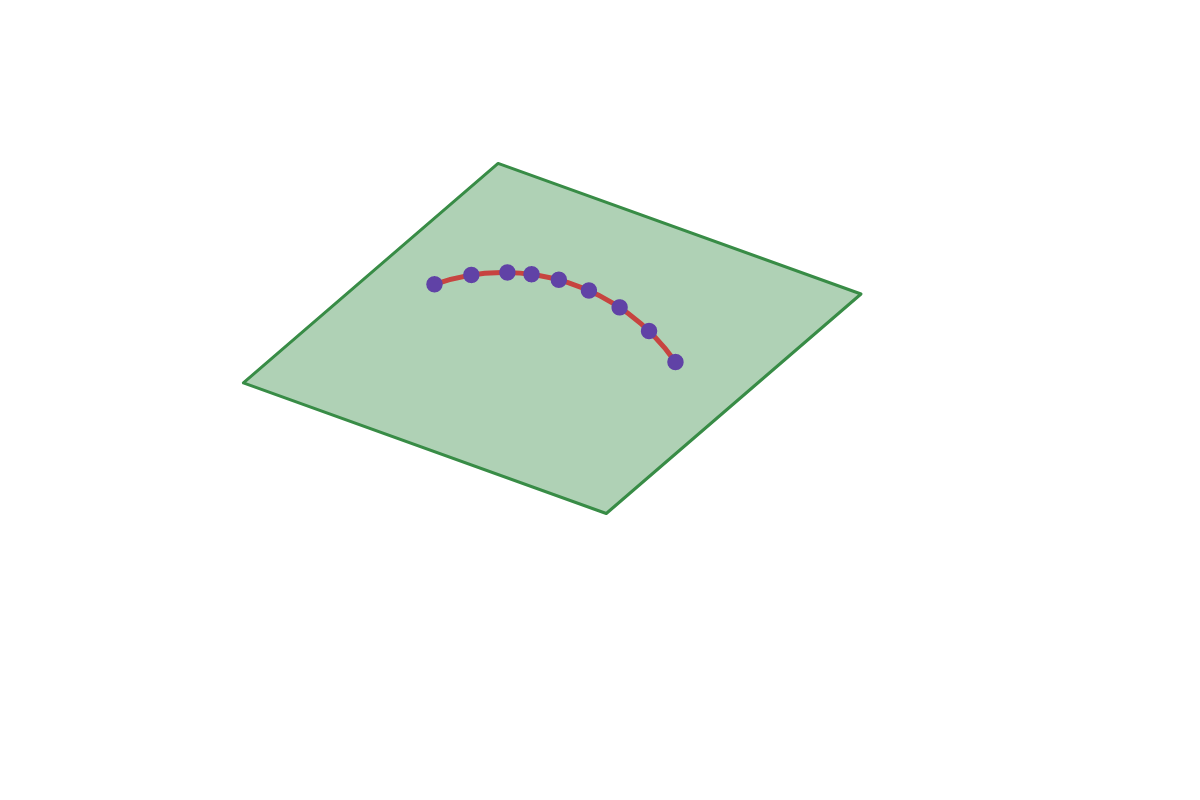

The solution to this SDE is a continuous collection of random variables \(\{\bfx_t\}_{t \in [0, T]}\) over the real interval \([0, T]\), these random variables trace stochastic trajectories over the time interval. Let \(p_t(\bfx_t)\) denote the marginal probability density function of \(\bfx_t\). Then \(p_0(\bfx_0) = p(\bfx)\) is the data distribution, likewise, for some sufficiently large \(T \in \R\) the terminal distribution \(p_T(\bfx_T)\) is close to some tractable noise distribution \(\pi(\bfx)\), called the prior distribution.

Reversing the diffusion SDE

So far we have only covered how to destroy data by perturbing it with white noise, however, for sampling we need to be able reverse this process to create data from noise. Remarkably, Anderson

Probability Flow ODE

Song et al.

DiM

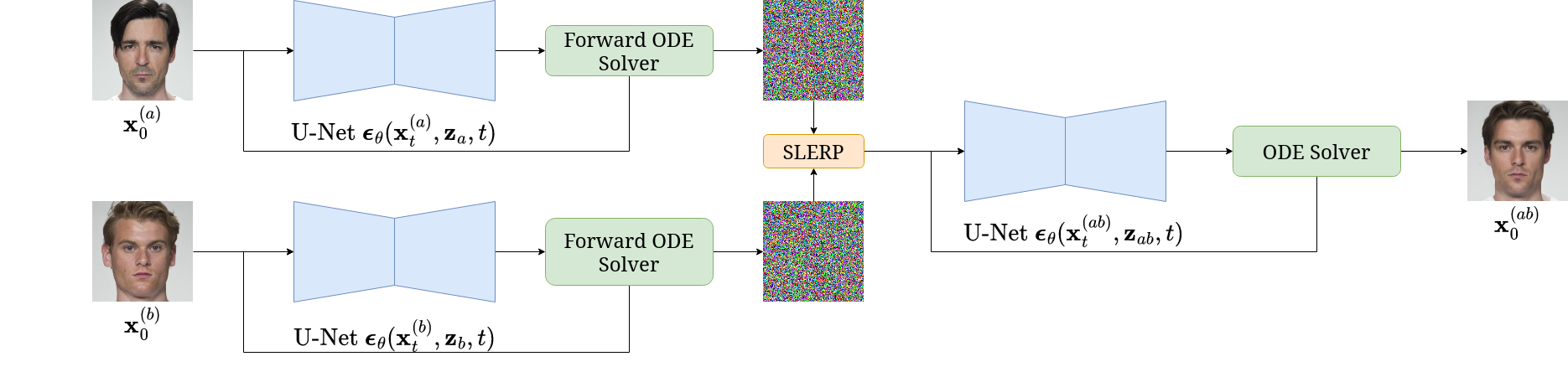

Difusion Morphs (DiM) are a novel kind of face morphing algorithm which solve the Probability Flow ODE both forwards and backwards in time to achieve state-of-the-art visual fidelity and morphing performance far surpassing previous representation-based morphing attacks. The DiM framework could use many different diffusion backbones like DDPM

Before discussing the DiM pipeline let’s establish some nomenclature. Let \(\bfx_0^{(a)}\) and \(\bfx_0^{(b)}\) denote two bona fide images of identities \(a\) and \(b\). Let \(\Z\) denote the latent vector space and let \(\bfz_a = E(\bfx_0^{(a)})\) denote the latent representation of \(a\) and likewise for \(\bfz_b\) where \(E: \X \to \Z\) is an encoding network

The DiM pipeline works by first solving the Probability Flow ODE as time flows with \(N_F \in \N\) timesteps forwards for both identities, i.e., \begin{equation} \bfx_T^{(\{a, b\})} = \Phi(\bfx_0^{(\{a, b\})}, \bfz_{\{a, b\}}, \mathbf{h}_\theta, \{t_n\}_{n=1}^{N_F}) \end{equation} to find the noisy representations of the original images. We then morph both the noisy and latent representations by a factor of \(\gamma = 0.5\) to find \begin{equation} \bfx_T^{(ab)} = \mathrm{slerp}(\bfx_T^{(a)}, \bfx_T^{(b)}; \gamma) \qquad \bfz_{ab} = \mathrm{lerp}(\bfz_a, \bfz_b; \gamma) \end{equation} Then we solve the Probability Flow ODE as time runs backwards with \(N \in \N\) timesteps \(\{\tilde t_n\}_{n=1}^N\) where \(\tilde t_1 = T\) such that \begin{equation} \bfx_0^{(ab)} = \Phi(\bfx_T^{(ab)}, \bfz_{ab}, \mathbf{h}_\theta, \{\tilde t_n\}_{n=1}^{N}) \end{equation}

All these equations for the DiM can be summarized with the following PyTorch pseudo code.

def dim(model, encoder, diff_eq, ode_solver, x_a, x_b, eps=0.002, T=80., n_encode=250, n_sample=100):

"""

DiM algorithm.

Args:

model (nn.Module): Noise prediction U-Net (or x-prediction / v-prediction).

encoder (nn.Module): Encoder network.

diff_eq (function): RHS of Probabilty Flow ODE.

ode_solver (function): Numerical ODE solver, e.g., RK-4, Adams-Bashforth.

x_a (torch.Tensor): Image of identity a.

x_b (torch.Tensor): Image of identity b.

eps (float): Starting timstep. Defaults to 0.002. For numeric stability.

T (float): Terminal timestep. Defaults to 80.

n_encode (int): Number of encoding steps. Defaults to 250.

n_sample (int): Number of sampling steps. Defaults to 100.

Returns:

x_morph (torch.Tensor): The morphed image.

"""

# Create latents

z_a = encoder(x_a)

z_b = encoder(x_b)

# Encode images into noise

timesteps = torch.linspace(eps, T, n_encode)

xs = ode_solver(torch.cat((x_a, x_b), dim=1), torch.cat((z_a, z_b), dim=1), diff_eq, timesteps)

x_a, x_b = xs.chunk(2, dim=1)

# Morph representations

z_ab = torch.lerp(z_a, z_b, 0.5)

x_ab = slerp(x_a, x_b, 0.5) # assumes slerp is defined somewhere

# Generate morph

timesteps = torch.linspace(T, eps, n_sample)

x_morph = ode_solver(x_ab, z_ab, diff_eq, timesteps)

return x_morph

A few important observations.

- The number of encoding steps \(N_F\) does not need to equal to the number of sampling steps \(N\), i.e., these process are decoupled.

- We can use separate numerical ODE solvers for encoding and sampling.

- Samples are iteratively generated, meaning there are more ways to guide the morph generation process

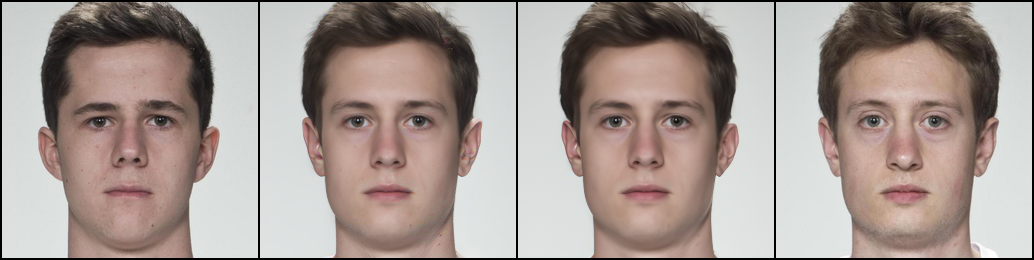

By using the powerful and flexible framework of diffusion models we can achieve state-of-the-art visual fidelity and morphing performance (measured in MMPMR

| Morphing Attack | AdaFace | ArcFace | ElasticFace |

|---|---|---|---|

| FaceMorpher | 89.78 | 87.73 | 89.57 |

| OpenCV | 94.48 | 92.43 | 94.27 |

| MIPGAN-I | 72.19 | 77.51 | 66.46 |

| MIPGAN-II | 70.55 | 72.19 | 65.24 |

| DiM | 92.23 | 90.18 | 93.05 |

High-order ODE solvers

The Probability Flow ODE in Equation \eqref{eq:empirical_pf_ode} can be transformed from a stiff ODE into a semi-linear ODE by using exponential integrators. Lu et al.

Previously, DiM used DDIM a first-order ODE solver of the form \begin{equation} \bfx_{t_n} = \frac{\alpha_{t_n}}{\alpha_{t_{n-1}}}\bfx_{t_{n-1}} - \sigma_{t_n}(e^{h_n} - 1)\boldsymbol\epsilon_\theta(\bfx_{t_{n-1}}, \bfz, t_{n-1}) \end{equation} where \(h_n = \lambda_{t_n} - \lambda_{t_{n-1}}\) is the step size of the ODE solver. This, however, means that we have a global truncation error of \(\mathcal{O}(h)\) (Theorem 3.2 in

By using a second-order multistep ODE solver DPM++ 2M

| ODE Solver | NFE (↓) | AdaFace (MMPMR ↑) | ArcFace (MMPMR ↑) | ElasticFace (MMPMR ↑) |

|---|---|---|---|---|

| DDIM | 100 | 92.23 | 90.18 | 93.05 |

| DPM++ 2M | 50 | 92.02 | 90.18 | 93.05 |

| DPM++ 2M | 20 | 91.26 | 89.98 | 93.25 |

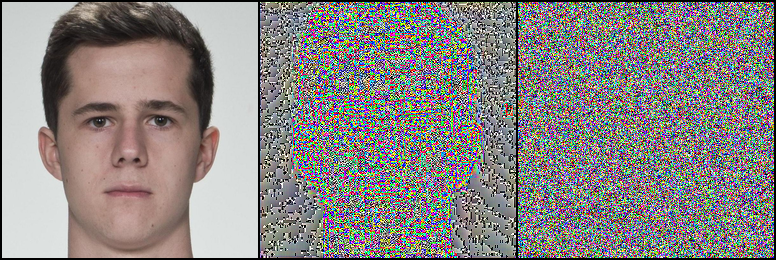

While we have shown that by solving the Probability Flow ODE as time runs backwards with high-order solves we can significantly reduce the NFE with no downside, we have yet to explore the impact on the encoding process. Interestingly, we discovered the success of DiM morphs is highly sensitive to the accuracy of the encoding process. Merely, dropping the number of encoding steps from 250 to 100 showed a marked decline in performance; conversely, increasing sampling steps over 100 showed no benefit. We posit that this might be due to the odd nature of the encoded images.

| ODE Solver | NFE (↓) | AdaFace (MMPMR ↑) | ArcFace (MMPMR ↑) | ElasticFace (MMPMR ↑) |

|---|---|---|---|---|

| DDIM | 100 | 91.82 | 88.75 | 91.21 |

| DPM++ 2M | 100 | 90.59 | 87.15 | 90.8 |

| DDIM | 50 | 89.78 | 86.3 | 89.37 |

| DPM++ 2M | 50 | 90.18 | 86.5 | 88.96 |

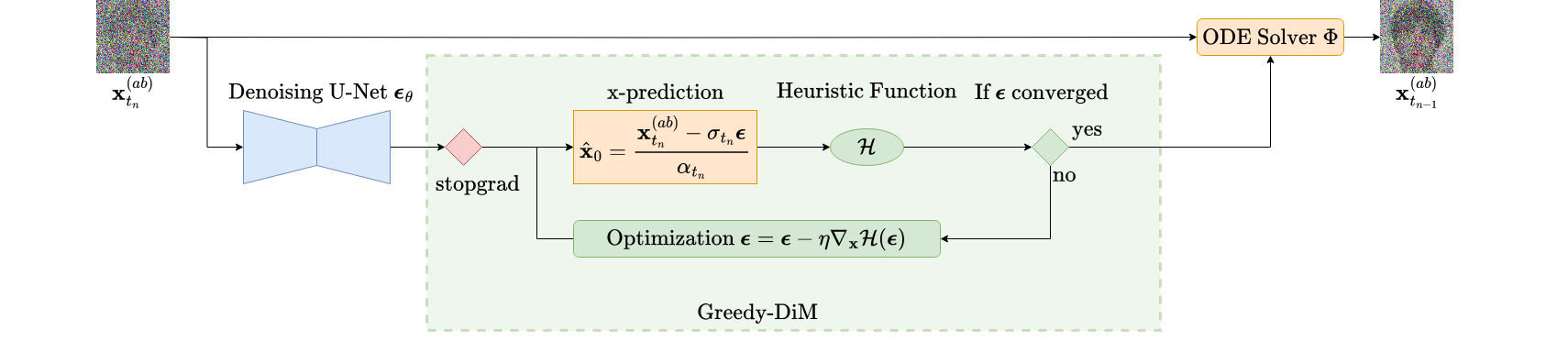

Greedy Guided Generation

Identity-guided generation massively improved the effectiveness of GAN-based morphs

We don’t actually care about the intermediate latents \(\bfx_T^{(ab)}, \bfz_{ab}\) just the output \(\bfx_0^{(ab)}\)

With this insight we instead propose a greedy strategy that optimizes each step of the ODE solver \(\Phi\) w.r.t. to the identity loss. We propose a new family of DiM algorithms called Greedy-DiM which leverages this greedy strategy to generate optimal morphs.

| DiM | Fast-DiM | Morph-PIPE | Greedy-DiM | |

|---|---|---|---|---|

| ODE Solver | DDIM | DPM++ 2M | DDIM | DDIM |

| Number of sampling steps | 100 | 50 | 2100 | 20 |

| Heuristic function | ✘ | ✘ | \(\mathcal{L}_{ID}^*\) | \(\mathcal{L}_{ID}^*\) |

| Search strategy | ✘ | ✘ | Brute-force search | Greedy optimization |

| Search space | ✘ | ✘ | Set of 21 morphs | \(\X\) |

| Probability the search space contains the optimal solution | ✘ | ✘ | 0 | 1 |

To further motivate Greedy-DiM we highlight recent work by Zhang et al.

- Find latent representations \(\bfx_T^{(a)},\bfx_T^{(b)},\bfz_a,\bfz_b\).

- Create a set of \(N = 21\) blend parameters \(\{\gamma_n\}_{n=1}^N \subseteq [0, 1]\).

- Generate \(N\) morphs with latents \(\mathrm{slerp}(\bfx_T^{(a)},\bfx_T^{(b)};\gamma_n)\) and \(\mathrm{lerp}(\bfz_a, \bfz_b; \gamma_n)\).

- Select the best morph w.r.t. the identity loss \(\mathcal{L}_{ID}^*\)

The identity loss was initially proposed by Zhang et al. .and was slightly modified by defined as the sum of two sub-losses: $$\mathcal{L}_{ID} = d(v_{ab}, v_a) + d(v_{ab}, v_b)\qquad \mathcal{L}_{diff} = \big|d(v_{ab}, v_a) - d(v_{ab}, v_b))\big |$$ with $$\mathcal{L}_{ID}^* = \mathcal{L}_{ID} + \mathcal{L}_{diff}\label{eq:loss_id}$$ where $v_a = F(\mathbf{x}_0^{(a)}), v_b = F(\mathbf{x}_0^{(b)}), v_{ab} = F(\mathbf{x}_0^{(ab)})$, and $F: \mathcal{X} \to V$ is an FR system which embeds images into a vector space $V$ which is equipped with a measure of distance, $d$, e.g. cosine distance.

While Morph-PIPE does outperform DiM it, however, does have a few notable drawbacks.

- The approach is very computationally expensive requiring the user to fully generate a set of \(N = 21\) morphs.

- The probability that Morph-PIPE actually finds the optimal morph is 0, even as \(N \to \infty\). More on this later.

In contrast our Greedy-DiM algorithm addresses both of these issues. First, by greedily optimizing a neighborhood around the predicted noise at each step of the ODE solver we reduce significantly reduce the number of calls to the U-Net while simultaneously expanding the size of the search space.

Remember that for VP type SDEs a sample at time \(t\) can be expressed as \(\bfx_t = \alpha_t\bfx_0 + \sigma_t\boldsymbol\epsilon_t\), this means we can rearrange the equation to solve for the denoised image \(\bfx_0\) to which we find \begin{equation} \label{eq:x-pred} \bfx_0 = \frac{\bfx_t - \sigma_t\boldsymbol\epsilon_t}{\alpha_t} \end{equation} Therefore, we recast our noise prediction from \(\boldsymbol\epsilon_\theta(\bfx_t, \bfz, t)\) into a prediction of \(\bfx_0\). It is this approximation which we then pass to the identity loss \(\mathcal{L}_{ID}^*\). We can then easily calculate \(\nabla_{\boldsymbol\epsilon} \mathcal{L}_{ID}^*\) using an automatic differentation library and preform gradient descent on \(\boldsymbol\epsilon\) to find the optimal \(\boldsymbol\epsilon\) w.r.t. the identity loss. We outline the Greedy-DiM algorithm with the following PyTorch pseudo code.

def dim(model, encoder, diff_eq, ode_solver, x_a, x_b, loss_func, eps=0.002, T=80., n_encode=250, n_sample=20, n_opt_steps=50, opt_kwargs={}):

"""

Greedy-DiM algorithm.

Args:

model (nn.Module): Noise prediction U-Net (or x-prediction / v-prediction).

encoder (nn.Module): Encoder network.

diff_eq (function): RHS of Probabilty Flow ODE.

ode_solver (function): Numerical ODE solver, must be first-order!

x_a (torch.Tensor): Image of identity a.

x_b (torch.Tensor): Image of identity b.

loss_fn (func): Loss function to guide generation.

eps (float): Starting timstep. Defaults to 0.002. For numeric stability.

T (float): Terminal timestep. Defaults to 80.

n_encode (int): Number of encoding steps. Defaults to 250.

n_sample (int): Number of sampling steps. Defaults to 100.

n_opt_steps (int): Number of optimization steps per timestep of the ODE solver. Defaults to 50.

opt_kwargs (dict): Dictionary of optimizer arguments. Defaults to {}.

Returns:

x_morph (torch.Tensor): The morphed image.

"""

# Create latents

z_a = encoder(x_a)

z_b = encoder(x_b)

# Encode images into noise

timesteps = torch.linspace(eps, T, n_encode)

xs = ode_solver(torch.cat((x_a, x_b), dim=1), torch.cat((z_a, z_b), dim=1), diff_eq, timesteps)

x_a, x_b = xs.chunk(2, dim=1)

# Morph representations

z = torch.lerp(z_a, z_b, 0.5)

xt = slerp(x_a, x_b, 0.5) # assumes slerp is defined somewhere

# Generate morph

timesteps = torch.linspace(T, eps, n_sample)

# Perform Greedy Optimization

for i, t in enumerate(timesteps[:-1]):

with torch.no_grad():

eps = model(xt, z, t)

eps = eps.detach().clone().requires_grad(True)

opt = torch.optim.RAdam([eps], **opt_kwargs)

x0_pred = convert_eps_to_x0(eps, t, xt.detach()) # assumes Eq. (13) is implemented somewhere

best_loss = loss_fn(x0_pred)

best_eps = eps

# Gradient descent on epsilon to find epsilon*

for _ in range(n_opt_steps):

opt.zero_grad()

x0_pred = convert_eps_to_x0(eps, t, xt.detach())

loss = loss_fn(x0_pred)

do_update = (loss < best_loss).float() # handles batches of morphs

best_loss = do_update * loss + (1. - do_update) * best_loss

best_eps = do_update[:, None, None, None] * eps + (1. - do_update)[:, None, None, None] * best_eps

loss.mean().backward()

opt.step()

eps = best_eps

# Use the approximated epsilon* to take the next step of the ode solver

xt = ode_solver(xt, z, diff_eq, [t, timesteps[i+1]])

return xt

This simple greedy strategy results in massive improvements over DiM in both visual fidelity and morphing performance. The performance of Greedy-DiM is unreasonably effective fooling the studied FR systems a 100% of the time.

| Morphing Attack | NFE (↓) | AdaFace (↑) | ArcFace (↑) | ElasticFace (↑) |

|---|---|---|---|---|

| FaceMorpher | - | 89.78 | 87.73 | 89.57 |

| OpenCV | - | 94.48 | 92.43 | 94.27 |

| MIPGAN-I | - | 72.19 | 77.51 | 66.46 |

| MIPGAN-II | - | 70.55 | 72.19 | 65.24 |

| DiM | 350 | 92.23 | 90.18 | 93.05 |

| Fast-DiM | 300 | 92.02 | 90.18 | 93.05 |

| Morph-PIPE | 2350 | 95.91 | 92.84 | 95.3 |

| Greedy-DiM | 270 | 100 | 100 | 100 |

Given the unreasonably strong empirical performance a natural question is to ask why Greedy-DiM performs so well. First, this greedy strategy is globally optimal (Theorem 3.1

The search space of Greedy-DiM is well-posed

The search space of Greedy-DiM is well-posed, meaning the probability the search space contains the optimal solution is 1. Formally, let \(\pr\) be probability measure on compact subset \(\X\) which denotes the distribution of the optimal face morph. For technical reasons we also assume \(\pr\) is absolutely continuous

Intuitively, the \(N = 21\) countably finite number of morphs that Morph-PIPE explores is infinitesimally small compared to the whole search space which is compact subset of Euclidean space.

The search space of Greedy-DiM allows it to find the optimal morph, while Morph-PIPE’s forbids this

Due to this ability of Greedy-DiM to better explore the space of morphs we can find the optimal morph. This combined with globally optimal substructure of the identity-guided DiM problem gives a reasonable explanation for why Greedy-DiM fooled the studied FR systems 100% of the time.

Note, the reason the numerical ODE solver \(\Phi\) must be first-order is that our greedy strategy breaks the guarantees needed for to accurately estimate the \(n\)-th order derivatives used in high-order solvers.

Concluding Remarks

This blog post gives a detailed introduction to DiM. I develop the motivation for DiM, and it’s successors Fast-DiM and Greedy-DiM, through a principled analysis of the face morphing problem. I was then able to demonstrate that this new family of morphing attacks pose a serious threat to FR systems and represent a significant advance in face morphing attacks. This post is a compilation of several papers we have published in the last few years. Please visit them if you are interested in more details.

- Zander W. Blasingame and Chen Liu. Greedy-DiM: Greedy Algorithms for Unreasonably Effective Face Morphs. IJCB 2024

- Zander W. Blasingame and Chen Liu. Leveraging Diffusion for Strong and High Quality Face Morphing Attacks. IEEE TBIOM 2024.

- Zander W. Blasingame and Chen Liu. Fast-DiM: Towards Fast Diffusion Morphs. IEEE Security & Privacy 2024.

- Richard E. Neddo, Zander W. Blasingame, and Chen Liu. The Impact of Print-and-Scan in Heterogeneous Morph Evaluation Scenarios. IJCB 2024

For more reading on more efficiently estimating the gradients of diffusion models please check out our recent paper.

Interestingly, our work on Greedy-DiM bares some similarity to recent work done by Yu et al.

It is my belief that the insights gained here are not only relevant to face morphing, but also to other downstream task with diffusion models. E.g., template inversion

If you found this useful and would like to cite this post in academic context, please cite this as:

Blasingame, Zander W. (Aug 2024). Face Morphing with Diffusion Models. https://zblasingame.github.io.

or as a BibTeX entry:

@article{blasingame2024face-morphing-with-diffusion-models,

title = {Face Morphing with Diffusion Models},

author = {Blasingame, Zander W.},

year = {2024},

month = {Aug},

url = {https://zblasingame.github.io/blog/2024/face-morphing-dim/}

}